Scaling ChatGPT Implementations: Infrastructure and Performance Optimization

Executive Summary

ChatGPT-based applications place heavy demands on compute, memory, and networking, so scaling them takes deliberate infrastructure planning and performance tuning. This article covers infrastructure setup, workload management, resource allocation, monitoring, and performance tuning techniques for efficient, scalable ChatGPT deployments.

Introduction

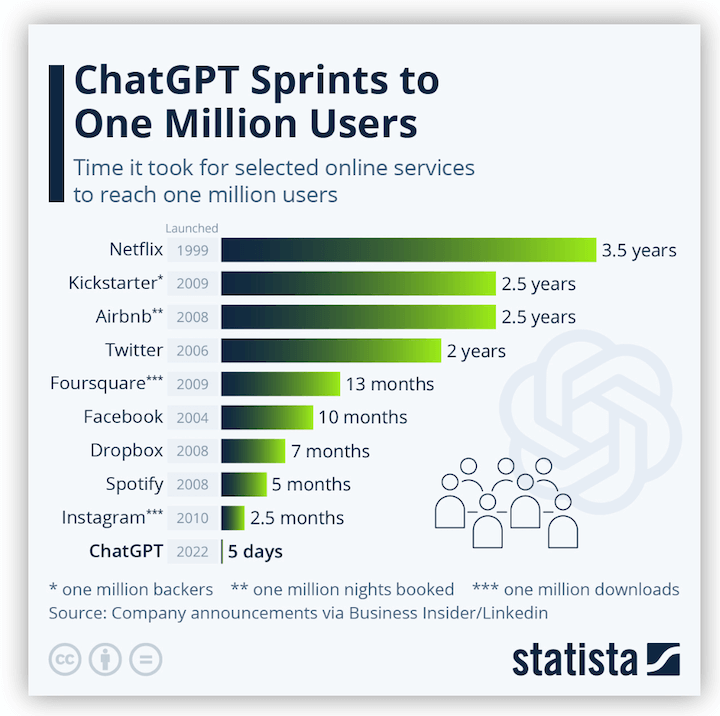

Interest in deploying ChatGPT has grown quickly across many domains. Scaling those deployments is challenging because large language models are computationally demanding and user traffic can be bursty. Good performance at scale therefore depends on two things: the infrastructure itself and how workloads are managed on it. This article is a guide to both.

Scaling Infrastructure

Choosing the Right Hardware:

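- High-Performance Processors: Server-class CPUs such as Intel Xeon Scalable or AMD EPYC handle request processing, data preprocessing, and orchestration for large-scale ChatGPT deployments.

- Abundant Memory: Ample RAM (128GB or more) gives headroom for loading large language models and datasets.

- Accelerated GPUs: GPUs provide hardware acceleration for training and inference. Consumer cards such as NVIDIA RTX or AMD Radeon can serve smaller models, while data-center GPUs (for example, NVIDIA A100/H100 or AMD Instinct) are generally a better fit for large models because of their larger memory.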
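
As a rough sizing aid for the memory and GPU choices above, the weights of a model occupy approximately the parameter count multiplied by the bytes per parameter. The sketch below applies that arithmetic to a hypothetical 7-billion-parameter model; the parameter count is an assumption chosen for illustration, and the estimate ignores activations, caches, and framework overhead.

```python
def model_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / (1024 ** 3)


# Hypothetical 7-billion-parameter model at three common precisions.
for label, width in [("FP32", 4), ("FP16", 2), ("INT8", 1)]:
    print(f"{label}: {model_memory_gib(7e9, width):.1f} GiB")
```

Real deployments need additional headroom on top of this figure, so treat it as a lower bound when comparing hardware options.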

Networking Infrastructure:

- High-Speed Connectivity: Leverage 10GbE or higher network connections for fast data transfer between servers and storage systems.

- Load Balancers: Employ load balancing mechanisms to distribute traffic across multiple servers and optimize resource utilization.

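- Content Delivery Networks (CDNs): Use CDNs to cache static content, such as front-end assets, and reduce latency for user requests. Model responses are generated per request and generally cannot be cached this way.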
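
To illustrate the load-balancing idea above, here is a minimal round-robin sketch. It is not how any particular load balancer is implemented; the `RoundRobinBalancer` class and the server names are hypothetical, and production balancers also track health and load.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backends)

    def next_backend(self):
        """Return the backend that should receive the next request."""
        return next(self._cycle)


# Hypothetical backend names, used only for illustration.
balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
assignments = [balancer.next_backend() for _ in range(5)]
print(assignments)
```

Round robin works best when requests cost roughly the same; for language-model inference, where request cost varies with prompt and output length, a least-connections or queue-depth policy may distribute load more evenly.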

Storage Considerations:

- High-Capacity Storage: Invest in fast, large-capacity storage (e.g., NVMe SSDs) to hold training datasets and model checkpoints and to load them quickly.

- Redundant Storage: Use redundant storage configurations (e.g., RAID) to survive disk failures and maintain availability. Redundancy does not replace backups, so back up checkpoints and datasets separately.

Workload Management

Containerization:

- Docker and Kubernetes: Package ChatGPT workloads as containers (for example, with Docker) and run them on an orchestrator such as Kubernetes, which makes deployments portable and easier to scale.

- Resource Isolation: Configure resource limits (CPU, memory) for each container to prevent resource contention and performance degradation.
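
The resource limits described above can be sketched as a container specification built in Python. The `resources.requests` and `resources.limits` fields follow the Kubernetes container spec; the container name, image, and the specific CPU and memory values are hypothetical placeholders.

```python
import json


def container_spec(name, image, cpu_request, cpu_limit, mem_request, mem_limit):
    """Build a Kubernetes-style container entry with CPU and memory bounds."""
    return {
        "name": name,
        "image": image,
        "resources": {
            # Requests are what the scheduler reserves for the container.
            "requests": {"cpu": cpu_request, "memory": mem_request},
            # Limits are the ceiling the container may not exceed.
            "limits": {"cpu": cpu_limit, "memory": mem_limit},
        },
    }


# Hypothetical name, image, and sizes, for illustration only.
spec = container_spec(
    "inference-worker", "example.com/inference:latest",
    cpu_request="2", cpu_limit="4", mem_request="8Gi", mem_limit="16Gi",
)
print(json.dumps(spec, indent=2))
```

Setting requests below limits lets containers burst when the node has spare capacity, while the limits keep one workload from starving its neighbors.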

Job Scheduling:

- Slurm or PBS: Use a batch job scheduler to queue and run ChatGPT jobs, such as training or fine-tuning runs, so that jobs share cluster resources efficiently.

- Priority-Based Scheduling: Assign priorities to different jobs based on their criticality and time constraints to optimize performance.
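
Priority-based scheduling can be sketched with a heap-backed queue. This is a simplified illustration, not how Slurm or PBS compute priorities; the `PriorityJobQueue` class and the job names are hypothetical, and lower numbers are assumed to mean higher priority.

```python
import heapq
import itertools


class PriorityJobQueue:
    """Runs lower-numbered priorities first; ties run in submission order."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker preserving FIFO order

    def submit(self, name, priority):
        heapq.heappush(self._heap, (priority, next(self._counter), name))

    def next_job(self):
        _priority, _order, name = heapq.heappop(self._heap)
        return name

    def __len__(self):
        return len(self._heap)


# Hypothetical jobs, for illustration only.
queue = PriorityJobQueue()
queue.submit("nightly-fine-tune", priority=5)
queue.submit("interactive-inference", priority=0)
queue.submit("batch-evaluation", priority=3)
queue.submit("urgent-hotfix-eval", priority=0)

order = [queue.next_job() for _ in range(len(queue))]
print(order)
```

The submission counter matters: without it, two jobs with equal priority would be compared by name, and the queue would no longer be first-come, first-served within a priority level.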

Resource Allocation

Dynamic Resource Allocation:

- Kubernetes Autoscaling: Use Kubernetes autoscaling (for example, the Horizontal Pod Autoscaler) to adjust the number of replicas automatically as demand changes, improving utilization and cost efficiency.

- Cluster Scaling: Scale out the cluster by adding nodes when workload requirements outgrow existing capacity.
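
The core of replica autoscaling is a proportional rule: the Kubernetes Horizontal Pod Autoscaler documentation gives it as desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that rule with minimum and maximum bounds. The function name and default bounds are assumptions, and the sketch leaves out the tolerance band and stabilization windows that the real autoscaler applies.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))
```

Choosing the metric is the harder design decision. CPU utilization is a common default, but for GPU-backed inference a request-queue depth or latency metric may track real demand more closely.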

Resource Monitoring:

- Prometheus and Grafana: Use Prometheus to collect resource metrics (CPU, memory, storage) and Grafana to visualize them in dashboards, so that performance bottlenecks can be identified early.

- Alerts and Notifications: Configure alerts to notify administrators of potential performance issues, enabling prompt intervention.
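
One common alerting pattern is to fire only when a metric stays above its threshold for several consecutive samples, which avoids paging administrators for brief spikes. The sketch below shows that logic in plain Python. It does not use any monitoring tool's API; the function name, threshold, and sample values are hypothetical.

```python
def sustained_breach(samples, threshold, min_consecutive):
    """Return True if `samples` exceed `threshold` for at least
    `min_consecutive` readings in a row."""
    streak = 0
    for value in samples:
        streak = streak + 1 if value > threshold else 0
        if streak >= min_consecutive:
            return True
    return False


# Hypothetical GPU memory utilization readings (fraction of capacity).
readings = [0.50, 0.95, 0.60, 0.92, 0.93, 0.97]
if sustained_breach(readings, threshold=0.90, min_consecutive=3):
    print("ALERT: GPU memory above 90% for 3 consecutive samples")
```

The `min_consecutive` parameter trades detection speed for noise: a larger value means fewer false alarms but a slower response to real problems.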

Performance Tuning

Model Optimizations:

- Model Pruning: Remove parameters or layers that contribute little to model output to reduce model size and inference time. Pruning can reduce accuracy, so validate the pruned model before deploying it.

- Quantization: Convert model parameters to lower-precision formats (e.g., INT8) to reduce memory requirements and speed up inference, usually at a small cost in accuracy.
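
To make the quantization idea concrete, the sketch below applies symmetric per-tensor INT8 quantization to a small list of weights in plain Python. It is one simple scheme among several, and production toolkits use more elaborate methods; the function names and weight values are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.42, -1.27, 0.03, 0.88]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is at most half of one quantization step.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, worst_error)
```

Each weight now fits in one byte instead of four, and the reconstruction error is bounded by half the scale. A single outlier weight inflates the scale for the whole tensor, which is one reason practical schemes often quantize per channel or per block.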

Code Optimizations:

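- Code Profiling: Identify performance hot spots in the ChatGPT application code using profiling tools such as Python’s cProfile or a memory profiler.

- Parallelization: Use Python’s multithreading for I/O-bound work, such as concurrent network requests, and multiprocessing for CPU-bound work, since the global interpreter lock (GIL) in standard CPython builds prevents threads from running Python bytecode in parallel.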
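
The two techniques above can be combined in a short sketch: profile a sequential run with `cProfile` to find where time goes, then overlap the I/O-bound calls with a thread pool. The `fake_request` function is a hypothetical stand-in that sleeps to simulate a network call; it is not a real API client.

```python
import cProfile
import io
import pstats
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(prompt):
    """Hypothetical stand-in for an I/O-bound call such as a network request."""
    time.sleep(0.05)
    return prompt.upper()


def run_sequential(prompts):
    return [fake_request(p) for p in prompts]


def run_threaded(prompts, workers=4):
    # Threads suit this workload because the time is spent waiting, not computing.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_request, prompts))


prompts = [f"prompt {i}" for i in range(8)]

# Profile the sequential version to see which functions dominate.
profiler = cProfile.Profile()
profiler.enable()
sequential = run_sequential(prompts)
profiler.disable()

report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(10)
print(report.getvalue())

# Time the threaded version for comparison.
start = time.perf_counter()
threaded = run_threaded(prompts)
print(f"threaded run took {time.perf_counter() - start:.2f}s")
```

The profile should show nearly all cumulative time inside the simulated request, which is the signal that the work is I/O-bound and threads will help. For CPU-bound hot spots, swapping in `ProcessPoolExecutor` from the same module is the usual alternative.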

Conclusion

Scaling ChatGPT implementations requires attention to infrastructure, workload management, resource allocation, and performance tuning together, since a bottleneck in any one of them limits the whole system. None of these is a one-time task: workloads change, so continue to monitor the system and retune it as demand grows and new bottlenecks appear. Organizations that take this proactive, data-driven approach are better placed to use ChatGPT to automate tasks and build new products.
