The Ethics of Autonomous Weapons: AI in Warfare

Executive Summary

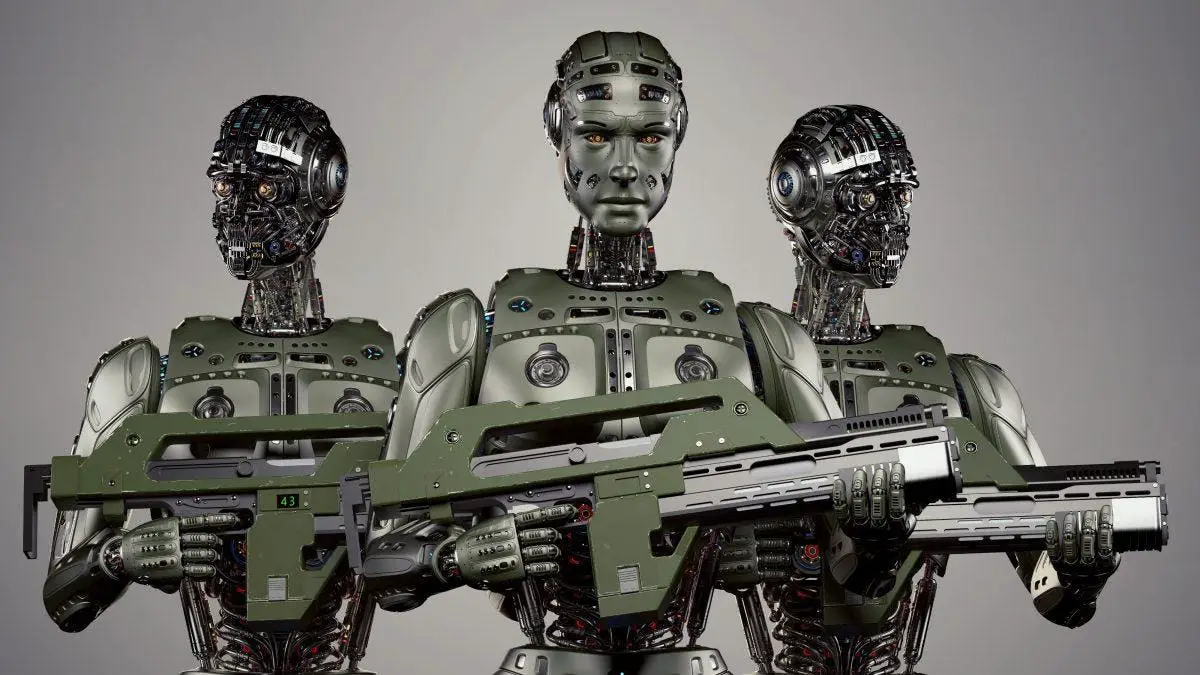

Autonomous weapons, also known as lethal autonomous weapons systems (LAWS) or “killer robots,” utilize artificial intelligence (AI) to make life-or-death decisions without human intervention. This raises profound ethical concerns:

- Loss of Human Control: Moral responsibility for the use of lethal force becomes diffused, which could erode accountability and oversight.

- Challenges to Unbiased Decision-Making: AI systems may struggle to fully comprehend complex moral dilemmas, potentially leading to unjust or discriminatory outcomes.

- Transparency and Explainability: The opacity of many AI algorithms makes it difficult to understand and assess the basis on which a weapon selects and engages its targets.

Introduction

As technology advances, AI is beginning to transform warfare, and autonomous weapons have the potential to reshape the battlefield. However, the ethical implications of these weapons require careful consideration to ensure that any use of them is responsible. This article examines the complexities of autonomous weapons, exploring the key ethical concerns they raise across five subtopics.

FAQ

Q: What exactly are autonomous weapons?

A: Autonomous weapons, or “killer robots,” are weapons capable of selecting and engaging targets without human intervention. They rely on AI algorithms to analyze data, make decisions, and deploy lethal force.

Q: Why are these weapons controversial?

A: Concerns center on the potential for these weapons to erode human control over the use of lethal force, introduce bias or errors, and undermine transparency and accountability.

Q: Are there any international laws or treaties regulating autonomous weapons?

A: There is currently no international consensus on, and no specific treaty regulating, the use of autonomous weapons. However, the Convention on Certain Conventional Weapons (CCW) has established a Group of Governmental Experts (GGE) to discuss autonomous weapons and explore possible ways of regulating them.

Subtopics

1. Moral Responsibility and Accountability

- Diffusion of Responsibility: The use of autonomous weapons can blur the lines of responsibility, making it unclear who is ultimately accountable for life-or-death decisions.

- Erosion of Human Control: As weapons become more autonomous, the role of human judgment and oversight diminishes, potentially leading to a loss of meaningful human control.

- Lack of Individual Culpability: Identifying and punishing responsible individuals may become difficult when decisions are made by AI systems, potentially undermining traditional notions of accountability.

2. Challenges to Unbiased Decision-Making

- Algorithmic Bias: AI algorithms can inherit biases from their training data or design, potentially leading to discriminatory or unjust outcomes. Autonomous weapons relying on such algorithms could amplify these biases in life-or-death situations.

- Unpredictable Behavior: AI systems can exhibit unpredictable behavior, particularly in complex and chaotic environments. This unpredictability raises concerns about the reliability and safety of autonomous weapons.

- Lack of Emotional Intelligence: AI systems lack the emotional intelligence and contextual judgment that human decision-makers bring to combat, making it difficult for them to fully understand its psychological and ethical complexities. This could lead to disproportionate or unjust targeting.

3. Transparency and Explainability

- Black Box Problem: Many AI algorithms, particularly deep neural networks, reach decisions through processes that are difficult to inspect and explain. This lack of transparency makes it challenging to evaluate individual decisions and ensure accountability.

- Explainability Gap: Even when efforts are made to explain AI decisions, humans may still struggle to fully comprehend the underlying reasoning, especially for complex algorithms.

- Potential for Misuse: Non-transparent algorithms could be exploited by malicious actors to intentionally target specific groups or individuals, raising concerns about potential misuse and abuse.

4. International Legal and Regulatory Framework

- Existing Laws and Treaties: The Geneva Conventions and other rules of international humanitarian law may not adequately address the use of autonomous weapons, creating a need for specific regulations.

- Lack of Consensus: There is currently no international consensus on how to regulate autonomous weapons, making it difficult to establish a comprehensive framework.

- Arms Race Concerns: The development of autonomous weapons by one state may prompt others to follow, triggering an arms race that could destabilize international relations.

5. Public Perception and Acceptance

- Public Trust Concerns: The use of autonomous weapons could erode public trust in the ethical conduct of warfare, especially if incidents or accidents occur.

- Social Stigma: Autonomous weapons may carry a social stigma, shaping how they are perceived and whether, and how, they are used.

- Psychological Impact: The use of autonomous weapons in warfare may have psychological consequences, such as desensitization to violence or a sense of dehumanization.

Conclusion

The ethics of autonomous weapons is a complex and multifaceted issue that demands sustained attention and international collaboration. By engaging in thoughtful discussion and promoting responsible development, we can help ensure that these technologies are used in a manner that upholds human dignity, promotes accountability, and respects the principles of justice and fairness in warfare.

Keyword Tags

- Lethal Autonomous Weapons Systems

- Artificial Intelligence and Warfare

- Ethics of Autonomous Weapons

- Moral Responsibility and Accountability

- International Law and Regulations