The Challenge of Explainable AI: Making Machines Understandable

Artificial intelligence (AI) has made tremendous strides in recent years, enabling machines to perform tasks that were once thought to be impossible. However, as AI systems become more complex, they also become more difficult to understand. This lack of explainability poses a significant challenge, as it makes it difficult to trust and rely on AI systems.

Why is Explainability Important?

Explainability is important for several reasons:

- Trust: People are more likely to trust and use an AI system if they can understand how it works and why it makes the decisions that it does.

- Reliability: Explainability can help to identify and fix errors in AI systems, making them more reliable.

- Accountability: When AI systems make mistakes, it is important to be able to explain why they made those mistakes. Explainability can help to identify the root cause of errors and prevent them from happening again.

- Safety: In some cases, AI systems can have a significant impact on people’s lives. For example, AI systems are used to make decisions about who gets loans, who gets jobs, and even who gets medical treatment. It is important to be able to explain how these decisions are made in order to ensure that they are fair and unbiased.

The Challenges of Explainability

There are a number of challenges associated with making AI systems explainable. Some of the most common challenges include:

- High Complexity: AI systems are often composed of multiple layers of interconnected components. This complexity makes it difficult to understand how the system works as a whole.

- Black Box Models: Some AI systems, such as deep neural networks, are known as “black box” models. This means that it is very difficult to understand how they make decisions.

- Data Bias: AI systems are often trained on data that is biased. This can lead to the system making decisions that are unfair or inaccurate.

- Lack of Human Knowledge: Sometimes, AI systems are making decisions that even humans do not understand. This can make it difficult to explain how the system is working.

Overcoming the Challenges

Despite the challenges, there is a growing body of research on explainable AI. Researchers are developing new methods and techniques for making AI systems more understandable.

Some of the most promising approaches to explainable AI include:

- Model-Agnostic Explanations: These methods treat the model as a black box and explain its predictions from inputs and outputs alone, so they can be applied to any AI model, regardless of its complexity.

- Feature Importance: This technique can be used to identify the features that are most important in making a decision.

- Saliency Maps: These visualizations can show how the input data is being used to make a decision.

- Counterfactual Explanations: This technique can be used to generate examples of inputs that would have led to a different decision.
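To make the first two approaches concrete, here is a minimal sketch of permutation feature importance, one common model-agnostic way to estimate which features matter. It only calls the model's prediction function, shuffles one feature column at a time, and measures how much accuracy drops. The names `permutation_importance` and `toy_model`, and the synthetic data, are assumptions made up for this illustration; they do not come from any particular library.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled, one column at a time."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        # Shuffle only column j, breaking its link to the labels.
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances


# Hypothetical black-box model: decides on feature 0 only and ignores feature 1.
def toy_model(row):
    return int(row[0] > 0.5)


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(r) for r in rows]

scores = permutation_importance(toy_model, rows, labels, n_features=2)
print(scores)  # feature 0 shows a clear drop; feature 1 is exactly 0.0
```

Because the sketch never looks inside `toy_model`, the same function would work with any model that exposes a prediction call. A feature the model ignores gets an importance of zero, which is the kind of signal that can help confirm a system is not relying on something it should not.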

Conclusion

Explainable AI is a challenging problem, but it is also an important one. By making AI systems more understandable, we can increase trust, reliability, accountability, and safety. As AI continues to play a larger role in our lives, explainability will become increasingly important.

Executive Summary

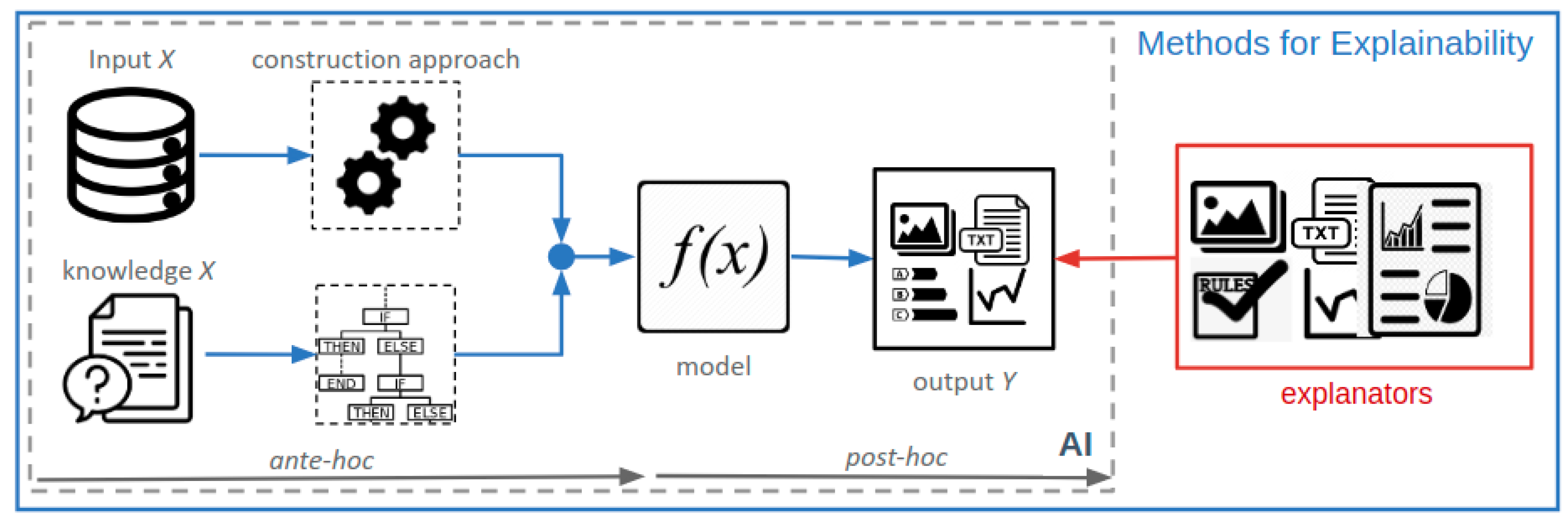

Explainable Artificial Intelligence (XAI) helps us understand how AI systems arrive at their decisions, making AI models more transparent, comprehensible, and trustworthy. However, significant challenges remain in developing XAI models.

Introduction

As AI takes on an increasingly significant role in our lives, it is essential that AI systems function as intended and that their reasoning is transparent and explainable. This article examines the hurdles of explainable AI: the complexities involved, current capabilities, ongoing research, and future prospects for designing AI models that can provide meaningful explanations for their inferences.

Top 5 Subtopics

1). The Need for Interpretability in AI

Description: Understanding and interpreting the inner workings of AI systems is crucial for several reasons, including:

- Building trust and confidence in AI systems.

- Uncovering biases and addressing ethical concerns.

- Facilitating the debugging and maintenance of AI systems.

- Enhancing human collaboration with AI systems.

2). Challenges in Developing XAI Models

Description: Designing XAI is challenging due to several factors:

- Black-box nature: Many AI models, particularly deep learning models, are inherently opaque, making it difficult to understand their decision-making processes.

- Complex interactions: AI models can learn intricate interactions among features from large quantities of data, which can lead to behavior that is hard to predict.

- High-dimensionality: AI models often work with immensely high-dimensional data, posing a barrier to understanding.

- Lack of shared mental models: Establishing common ground between human understanding and AI processes is a significant challenge.

3). Current Research Directions in XAI

Description: Ongoing research in XAI covers a broad spectrum of approaches, including:

- Model-Agnostic Explanations: These techniques explain a model by inspecting only its inputs and outputs, which makes them applicable to any model.

- Model-specific Explanations: These methods delve into the inner workings of a particular model, offering detailed insights into its decision-making processes.

- Human-Centered Explanations: This research area focuses on customizing explanations to suit the preferences and understanding level of individual users.

- Causal Explanations: To pinpoint the causal relationships between input features and outputs of an AI model, causal explanations employ techniques like intervention and counterfactuals.
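As a hedged illustration of the counterfactual idea in the last item, the sketch below asks, for a rejected applicant, how much a single feature would have to change for the decision to flip. It assumes a deliberately simple linear scoring model; the weights, threshold, and feature names (`income`, `debt`) are invented for this example, and real models generally need a search procedure rather than a closed-form answer.

```python
def score(applicant, weights):
    """Linear score: weighted sum of the applicant's features."""
    return sum(weights[name] * applicant[name] for name in weights)


def decide(applicant, weights, threshold):
    """Approve when the score reaches the threshold."""
    return score(applicant, weights) >= threshold


def single_feature_counterfactuals(applicant, weights, threshold):
    """For each feature, the change to that feature alone that reaches the threshold."""
    gap = threshold - score(applicant, weights)
    return {name: gap / w for name, w in weights.items() if w != 0}


# Hypothetical model and applicant, chosen only for illustration.
weights = {"income": 0.5, "debt": -1.0}
threshold = 10.0
applicant = {"income": 30.0, "debt": 8.0}

print(decide(applicant, weights, threshold))  # False: score is 7.0
changes = single_feature_counterfactuals(applicant, weights, threshold)
print(changes)  # {'income': 6.0, 'debt': -3.0}

# Intervene on one feature and confirm that the decision flips.
what_if = dict(applicant, income=applicant["income"] + changes["income"])
print(decide(what_if, weights, threshold))  # True
```

An explanation built from this output might read "the application would have been approved with 6 more units of income or 3 fewer units of debt," which is often more actionable for the person affected than a list of model weights.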

4). Applications of Explainable AI

Description: XAI finds applications in numerous domains, including:

- Healthcare Industry: XAI can assist medical professionals in comprehending AI-driven diagnostic tools.

- Financial Sector: Explanations can help financial advisors understand and justify model-driven investment recommendations.

- Manufacturing Industry: XAI can clarify the rationale behind decisions made by AI-powered quality control systems.

- Legal Domain: Explainable AI models can support lawyers in deciphering complex legal documents and predicting case outcomes.

5). Ethical and Regulatory Considerations

Description: Ethical and regulatory issues surround the deployment of AI systems:

- Transparency and accountability: As AI systems are increasingly employed in decision-making processes, ensuring transparency and accountability is paramount.

- Bias and fairness: XAI can assist in detecting and mitigating bias, promoting fairness in AI systems such as hiring algorithms.

- Privacy and security: Explainable AI can aid in safeguarding privacy and in demonstrating compliance with ethical and regulatory requirements.

Conclusion

XAI is an evolving discipline, presenting both challenges and opportunities. To unlock the full potential of AI, we must strive to develop more explainable models. By breaking down the black box and shedding light on the decision-making processes of AI systems, we can build trust and confidence, address ethical concerns, and unlock new possibilities for human-AI collaboration.

Nowadays, there are loads of sophisticated computer models that make decisions about people’s jobs, loans, and lives, but most such models are black boxes—they can make predictions, but they can’t explain how or why they reached a particular conclusion.

Why bother making AI explainable? Because humans are fundamentally curious, and we want to understand how systems like this make decisions.

Unveiling that black box would benefit us all, and not just for accountability’s sake. Explainable AI could help us understand why algorithms make erroneous conclusions and how to avoid such mistakes in the first place, giving us a greater ability to harness AI for good.

If we hope to deploy AI responsibly and ethically in the real world, I believe making machines understandable is crucial.

What readily comes to mind is the public’s use of this reliable assistant who goes by the name of Siri. There is no lack of frustration and hilarity when Siri butchers the simple tasks and requests we throw her way.

As AI increases in power and complexity, having systems that are transparent and accountable is increasingly important. Explainable AI is one step towards making AI more trustworthy and reliable.

The process of explaining what a machine-learning system has learned is not an easy one. It requires a deep understanding of both the machine-learning system and the domain in which it is being used. Yet it is necessary in order to make AI understandable and useful.

Just imagine having an AI do your taxes or write you a sonnet or diagnose you with a deadly virus and not being able to peek under the hood and see how it came up with its conclusion—that’s terrifying.