Introduction

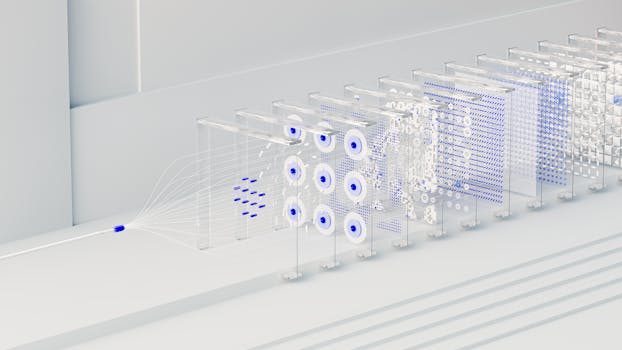

Debugging caching systems is a critical part of keeping modern software applications fast and reliable. Caching stores frequently accessed data in a fast, temporary layer so it can be retrieved without going back to the slower source, and it can significantly improve system efficiency. It also introduces the risk of stale data: outdated information served from the cache after the underlying data has changed. Stale data leads to inconsistent application behavior, incorrect outputs, and a poor user experience. This article examines why stale data arises, how to detect it, and which strategies prevent and resolve it, so that cached systems stay correct as well as fast.

Identifying And Resolving Stale Data Issues In Caching Systems

In the realm of software engineering, caching systems play a pivotal role in enhancing performance by temporarily storing frequently accessed data. However, these systems are not without their challenges, one of the most notorious being the issue of stale data. Stale data refers to outdated or no longer valid information that remains in the cache, leading to inconsistencies and potential errors in the application. Identifying and resolving stale data issues is crucial for maintaining the integrity and reliability of any system that relies on caching.

Identifying stale data starts with understanding the data lifecycle: where each value originates, how it is cached, and what invalidates it. Expiration policies such as Time-to-Live (TTL) settings bound how long an entry can live, but they do not react to changes in the underlying data source, so an entry can be wrong for up to its full TTL. Cache hit and miss rates are worth monitoring for overall health, but they do not reveal staleness on their own, because a stale entry is still served as a hit. Detecting stale data directly requires comparing cached values with the source of truth, for example by periodically sampling cached keys and re-reading them from the source.
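One way to check for staleness directly is to sample cached keys and compare each value with a fresh read from the source of truth. The sketch below assumes the cache can be viewed as a Python dictionary and that a `load_from_source` callable returns the authoritative value; `audit_staleness` and its parameters are hypothetical names for illustration, not a library API.

```python
import random
from typing import Callable, Dict, Hashable, List


def audit_staleness(
    cache: Dict[Hashable, object],
    load_from_source: Callable[[Hashable], object],
    sample_size: int = 100,
    seed: int = 0,
) -> List[Hashable]:
    """Return the sampled keys whose cached value differs from the source of truth."""
    rng = random.Random(seed)  # seeded so audit runs are reproducible
    keys = list(cache)
    # Sampling bounds the extra load the audit puts on the data source.
    sample = rng.sample(keys, min(sample_size, len(keys)))
    return [key for key in sample if cache[key] != load_from_source(key)]


source = {"user:1": "Alice", "user:2": "Bob"}
cache = {"user:1": "Alice", "user:2": "Robert"}  # user:2 changed at the source only
print(audit_staleness(cache, source.__getitem__))  # ['user:2']
```

A key written between the cache read and the source read can show up as a false positive, so flagged keys are worth re-checking before treating them as stale.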

Another effective approach to identifying stale data is cache versioning. Each cached item is stored with a version number, and the data source maintains the current version for that item. When the underlying data changes, its version is incremented, so a cache lookup can compare the stored version with the current one and either use the cached value or fetch fresh data from the source. This pays off when reading the current version is much cheaper than reading the full value, for example a version column or a small counter key; in that case it gives a more dynamic and responsive check on cache validity than expiration alone.
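A minimal sketch of version-checked lookups, assuming the source exposes a cheap per-key version (`current_version`) separately from the full value (`load`). `VersionedCache`, `current_version`, and `load` are hypothetical names, and the data stores are plain dictionaries here.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Entry:
    value: Any
    version: int


class VersionedCache:
    def __init__(self, current_version: Callable[[str], int], load: Callable[[str], Any]) -> None:
        self._entries: Dict[str, Entry] = {}
        self._current_version = current_version  # cheap read of the authoritative version
        self._load = load                        # expensive read of the full value

    def get(self, key: str) -> Any:
        # Read the version first: if the data changes before the load below,
        # the entry is stored under the older version and reloaded next time.
        version = self._current_version(key)
        entry = self._entries.get(key)
        if entry is not None and entry.version == version:
            return entry.value  # versions match: the cached copy is current
        value = self._load(key)  # missing or outdated: fetch fresh data
        self._entries[key] = Entry(value, version)
        return value


versions = {"product:7": 1}
db = {"product:7": "price=10"}
cache = VersionedCache(versions.__getitem__, db.__getitem__)
cache.get("product:7")          # loaded from db
db["product:7"] = "price=12"
versions["product:7"] += 1      # the writer bumps the version with the data
print(cache.get("product:7"))   # price=12
```

Reading the version before the value errs on the side of freshness: a race can only cause an extra reload, never a stale hit under a new version.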

Once stale data has been identified, resolving the issue involves several strategies. One of the most straightforward methods is cache invalidation, which explicitly removes outdated data from the cache. This can be done manually or through automated processes triggered by changes in the data source. For instance, a database update could trigger a cache invalidation event, ensuring that the next request retrieves the most current data.
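The sketch below wires a write to the source to an invalidation event, using in-memory dictionaries for both the database and the cache. `InvalidatingStore`, `update`, and `read` are hypothetical names; in a real system the event would typically be fired by application code after a commit or come from a change-data-capture feed.

```python
from typing import Any, Callable, Dict, List


class InvalidatingStore:
    """Cache-aside reads, with an invalidation event fired by every write (hypothetical API)."""

    def __init__(self) -> None:
        self.db: Dict[str, Any] = {}
        self.cache: Dict[str, Any] = {}
        # Subscribers notified with the key of every changed record.
        self._listeners: List[Callable[[str], None]] = [self._invalidate]

    def _invalidate(self, key: str) -> None:
        self.cache.pop(key, None)  # removing an absent key is a no-op

    def update(self, key: str, value: Any) -> None:
        self.db[key] = value       # 1. commit the change to the source of truth
        for listener in self._listeners:
            listener(key)          # 2. fire the invalidation event

    def read(self, key: str) -> Any:
        if key in self.cache:
            return self.cache[key]
        value = self.db[key]       # miss: read through to the source
        self.cache[key] = value
        return value
```

Invalidating after the write, rather than before, avoids a reader repopulating the cache with the old value in the gap before the write lands. Under concurrency a narrower race remains, which is one reason a TTL is often kept as a backstop.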

In addition to invalidation, cache refreshing is another technique to address stale data. This involves periodically updating the cached data with fresh information from the source, either through scheduled tasks or on-demand requests. While this approach can reduce the likelihood of serving stale data, it also introduces additional overhead, as frequent refreshes can negate some of the performance benefits of caching.
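A minimal sketch of scheduled refreshing, assuming a `load` callable for the source. `ScheduledRefreshCache` and `refresh_all` are hypothetical names; something like a cron job or a timer thread would call `refresh_all` periodically. The `source_reads` counter makes the overhead described in the paragraph above visible.

```python
from typing import Any, Callable, Dict


class ScheduledRefreshCache:
    def __init__(self, load: Callable[[str], Any]) -> None:
        self._load = load
        self._entries: Dict[str, Any] = {}
        self.source_reads = 0  # how many times the source was hit

    def get(self, key: str) -> Any:
        if key not in self._entries:
            self._entries[key] = self._fetch(key)
        return self._entries[key]

    def refresh_all(self) -> None:
        # Intended to be called on a schedule; reloads every cached key,
        # so each cycle costs one source read per cached entry.
        for key in list(self._entries):
            self._entries[key] = self._fetch(key)

    def _fetch(self, key: str) -> Any:
        self.source_reads += 1
        return self._load(key)
```

Between refresh cycles an entry can still be stale, so the refresh interval trades freshness against source load.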

Moreover, implementing a hybrid strategy that combines both invalidation and refreshing can offer a balanced solution. For example, a system could use TTL settings for regular expiration, versioning for dynamic validation, and periodic refreshes for critical data that requires high accuracy. This multi-faceted approach ensures that the cache remains as current as possible while minimizing the performance impact.

Write strategies such as write-through and write-behind caching also affect stale data. In write-through caching, every write goes to both the cache and the data source as part of the same operation, so the cache stays consistent with every write made through it (writes that bypass the cache layer can still leave it stale). Write-behind caching updates the cache immediately and writes to the data source asynchronously. This can improve performance, but until the deferred write lands, the data source itself lags behind the cache, and pending writes can be lost if the cache fails, so it requires careful management.
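A minimal write-through sketch with an in-memory dictionary as the backing store (`WriteThroughCache` is a hypothetical name). It also illustrates the limit of the technique: a write that bypasses the cache layer leaves the cache stale.

```python
from typing import Any, Dict


class WriteThroughCache:
    def __init__(self, store: Dict[str, Any]) -> None:
        self.store = store
        self.cache: Dict[str, Any] = {}

    def write(self, key: str, value: Any) -> None:
        self.store[key] = value  # persist to the backing store first
        self.cache[key] = value  # then update the cache in the same operation

    def read(self, key: str) -> Any:
        if key not in self.cache:
            self.cache[key] = self.store[key]
        return self.cache[key]


store = {}
c = WriteThroughCache(store)
c.write("x", 1)
store["x"] = 2       # a direct write that bypasses the cache layer
print(c.read("x"))   # 1: stale, because the cache never saw that write
```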

In conclusion, addressing stale data in caching systems is a multifaceted challenge that requires a combination of identification and resolution strategies. By implementing robust cache expiration policies, versioning, invalidation, refreshing, and advanced caching techniques, developers can significantly reduce the risk of stale data and maintain the reliability and performance of their applications. As caching systems continue to evolve, staying vigilant and proactive in managing stale data will remain a critical aspect of software engineering.

Best Practices For Preventing Stale Data In Caching Mechanisms

Preventing stale data in caching mechanisms is a critical aspect of maintaining the integrity and performance of any system that relies on cached information. Stale data, which refers to outdated or incorrect information stored in a cache, can lead to a myriad of issues, including incorrect application behavior, data inconsistency, and user dissatisfaction. To mitigate these risks, it is essential to adopt best practices that ensure the cache remains synchronized with the underlying data source.

One fundamental practice is to implement an appropriate cache invalidation strategy. Cache invalidation is the process of removing or updating cached data when the underlying data changes. There are several strategies to consider, such as time-to-live (TTL), where cached data is automatically invalidated after a specified period. This approach is simple to implement and can be effective in scenarios where data changes are predictable. However, it may not be suitable for all use cases, particularly those requiring real-time data accuracy.
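A minimal TTL cache sketch with lazy expiry, assuming an injectable clock so expiry can be tested without waiting. `TTLCache` is a hypothetical class; production caches usually provide this natively, for example through the `EX` option of the Redis `SET` command.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: Any) -> None:
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: evict lazily on read
            return None
        return value
```

Until an entry expires, a change at the source is invisible to readers, so the TTL is effectively the maximum staleness window for entries that are never explicitly invalidated.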

Another strategy is write-through caching, where each write is applied to both the cache and the underlying data store before it is acknowledged. This keeps the cache consistent with the data store for every write that passes through it. However, it adds latency, since each write must complete in two places. Alternatively, write-behind caching writes data to the cache first and to the data store asynchronously. While this can improve write performance, it introduces the risk of data loss if the system fails before the deferred write completes.
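A minimal write-behind sketch, using an in-memory dictionary as the store and a queue of pending writes (`WriteBehindCache` and `flush` are hypothetical names). It shows both sides of the trade-off: writes return after updating only the cache, and the store lags until `flush` runs.

```python
from collections import deque
from typing import Any, Deque, Dict, Tuple


class WriteBehindCache:
    def __init__(self, store: Dict[str, Any]) -> None:
        self.store = store
        self.cache: Dict[str, Any] = {}
        self._pending: Deque[Tuple[str, Any]] = deque()  # writes not yet persisted

    def write(self, key: str, value: Any) -> None:
        self.cache[key] = value             # the write is acknowledged here
        self._pending.append((key, value))  # persisted later by flush()

    def flush(self) -> None:
        # Normally run by a background worker. If the process dies before
        # this runs, every write still in _pending is lost.
        while self._pending:
            key, value = self._pending.popleft()
            self.store[key] = value

    def read(self, key: str) -> Any:
        # Readers going through the cache see new values immediately;
        # readers going straight to the store do not until flush().
        return self.cache[key] if key in self.cache else self.store[key]
```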

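Cache coherence protocols address the same problem at the hardware level. MESI (Modified, Exclusive, Shared, Invalid), for example, keeps the private caches of CPU cores consistent by invalidating other copies of a cache line when one core writes to it. MESI itself is not something an application deploys between a cache and a database, but the underlying idea carries over: when data changes, invalidation messages are propagated to every cache that may hold a copy, thereby preventing stale data. Implementing this reliably across a network is complex, because messages can be delayed or lost, and it adds overhead.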
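Outside hardware, a common application-level analogue is to broadcast an invalidation to every node that keeps a local copy whenever a value changes. The sketch simulates the broadcast channel in-process; `InvalidationBus` and `NodeCache` are hypothetical names, and in a deployment the channel might be a message broker or Redis Pub/Sub.

```python
from typing import Any, Dict, List


class InvalidationBus:
    """In-process stand-in for a publish/subscribe channel."""

    def __init__(self) -> None:
        self._subscribers: List["NodeCache"] = []

    def subscribe(self, node: "NodeCache") -> None:
        self._subscribers.append(node)

    def publish(self, key: str, origin: "NodeCache") -> None:
        for node in self._subscribers:
            if node is not origin:
                node.drop(key)  # peers discard their now-invalid copy


class NodeCache:
    def __init__(self, bus: InvalidationBus, store: Dict[str, Any]) -> None:
        self._local: Dict[str, Any] = {}
        self._bus = bus
        self._store = store  # shared source of truth
        bus.subscribe(self)

    def read(self, key: str) -> Any:
        if key not in self._local:
            self._local[key] = self._store[key]
        return self._local[key]

    def write(self, key: str, value: Any) -> None:
        self._store[key] = value
        self._local[key] = value
        self._bus.publish(key, origin=self)  # tell peers their copy is stale

    def drop(self, key: str) -> None:
        self._local.pop(key, None)
```

Unlike a hardware bus, a network channel can delay or drop messages, so this is usually combined with a TTL to bound how long a missed invalidation can leave a node stale.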

Monitoring and logging are indispensable tools in the fight against stale data. By continuously monitoring cache performance and logging cache hits, misses, and invalidations, administrators can gain valuable insight into the cache’s behavior. This information can be used to fine-tune cache configurations and identify potential issues before they escalate. Alerts are most useful when they target signals tied to staleness, such as writes to the data source that are not followed by matching invalidations. Hit and miss rates alone are a weak signal, because a stale entry is still served as a hit.
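A minimal sketch of in-process counters for hits, misses, and invalidations (`InstrumentedCache` is a hypothetical name, and `load` stands in for the data source). In practice these counters would be exported to a monitoring system; comparing the invalidation count with the number of writes to the source is one way to spot lost invalidations.

```python
from collections import Counter
from typing import Any, Callable, Dict


class InstrumentedCache:
    def __init__(self, load: Callable[[str], Any]) -> None:
        self._load = load
        self._entries: Dict[str, Any] = {}
        self.stats: Counter = Counter()

    def get(self, key: str) -> Any:
        if key in self._entries:
            self.stats["hits"] += 1  # note: a stale entry also counts as a hit
            return self._entries[key]
        self.stats["misses"] += 1
        value = self._load(key)
        self._entries[key] = value
        return value

    def invalidate(self, key: str) -> None:
        if key in self._entries:
            del self._entries[key]
            self.stats["invalidations"] += 1  # count only entries actually removed

    def hit_ratio(self) -> float:
        total = self.stats["hits"] + self.stats["misses"]
        return self.stats["hits"] / total if total else 0.0
```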

Another best practice is to design the system with idempotency in mind. Idempotent operations ensure that repeated execution of the same operation yields the same result, regardless of the number of times it is performed. This characteristic is particularly useful in caching systems, as it allows for safe retries of failed operations without risking data inconsistency.
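Deleting a cache key is naturally idempotent, which makes invalidation safe to retry. The sketch assumes a `delete` callable that may raise `ConnectionError` on transient failures; `invalidate_with_retry` and its parameters are hypothetical.

```python
import time
from typing import Callable


def invalidate_with_retry(
    delete: Callable[[str], None],
    key: str,
    attempts: int = 3,
    backoff: float = 0.05,
) -> bool:
    """Retry an idempotent delete with exponential backoff; return True on success."""
    for attempt in range(attempts):
        try:
            delete(key)  # safe to repeat: deleting an absent key changes nothing
            return True
        except ConnectionError:
            time.sleep(backoff * (2 ** attempt))
    return False
```

Even if an earlier attempt actually succeeded but its acknowledgement was lost, repeating the delete leaves the cache in the same state. A non-idempotent operation such as an increment would not be safe to retry this way.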

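Furthermore, distributed caching solutions can enhance the scalability and reliability of the caching system. Redis and Memcached can spread cached data across multiple nodes so that no single node holds the whole working set. Redis can additionally replicate data to replica nodes for fault tolerance, whereas Memcached has no built-in replication, so losing a node turns its keys into cache misses. Distribution adds its own staleness risks: Redis replication is asynchronous, so a read served by a replica can return an older value than the primary holds, and any local in-process caches layered in front of a shared cache must be invalidated as well. Proper invalidation and read-routing mechanisms are therefore needed so that every node serves a consistent view of the data.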
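The toy model below, with hypothetical names throughout, illustrates how asynchronous replication can produce stale reads: a value written to the primary is not visible on the replica until replication catches up. It is not a model of any specific product's internals. Routing reads to the primary is one way to get read-your-writes behavior where it matters.

```python
from collections import deque
from typing import Any, Deque, Dict, Optional, Tuple


class ReplicatedCache:
    """Toy primary with one asynchronously updated replica."""

    def __init__(self) -> None:
        self.primary: Dict[str, Any] = {}
        self.replica: Dict[str, Any] = {}
        self._replication_log: Deque[Tuple[str, Any]] = deque()

    def set(self, key: str, value: Any) -> None:
        self.primary[key] = value
        self._replication_log.append((key, value))  # shipped to the replica later

    def replicate(self) -> None:
        # Stands in for the background replication stream catching up.
        while self._replication_log:
            key, value = self._replication_log.popleft()
            self.replica[key] = value

    def get(self, key: str, read_from_primary: bool = False) -> Optional[Any]:
        source = self.primary if read_from_primary else self.replica
        return source.get(key)
```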

Lastly, regular cache audits and reviews are essential to maintaining a healthy caching system. Periodically reviewing cache configurations, invalidation policies, and performance metrics can help identify areas for improvement and ensure that the caching system continues to meet the application’s requirements.

In conclusion, preventing stale data in caching mechanisms requires a multifaceted approach that includes implementing robust invalidation strategies, employing cache coherence protocols, monitoring and logging cache activity, designing for idempotency, leveraging distributed caching solutions, and conducting regular audits. By adhering to these best practices, organizations can minimize the risk of stale data and ensure that their caching systems remain reliable and performant.

Tools And Techniques For Debugging Stale Data In Caching Systems

Debugging caching systems, particularly when dealing with stale data, can be a complex and challenging task. Stale data, which refers to outdated or incorrect information being served from the cache, can lead to significant issues in application performance and user experience. To effectively address these problems, it is essential to employ a variety of tools and techniques designed specifically for debugging caching systems.

One of the primary tools for debugging stale data in caching systems is logging. By implementing comprehensive logging, developers can track cache hits and misses, along with when each entry was cached and when it was last accessed. This information helps reveal patterns, and correlating it with the write history of the underlying data store shows when a cached value became stale and how long it kept being served. Logs can also reveal discrepancies between the cache and the data store, providing further insight into the causes of stale data.
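A minimal logging sketch using Python's standard `logging` module: each miss is logged with the time the entry was cached, and each hit with the entry's age. `LoggingCache` is hypothetical, and the clock is injectable so the output can be tested.

```python
import logging
import time
from typing import Any, Callable, Dict, Tuple

log = logging.getLogger("cache")


class LoggingCache:
    def __init__(self, load: Callable[[str], Any], clock: Callable[[], float] = time.time) -> None:
        self._load = load
        self._clock = clock
        self._entries: Dict[str, Tuple[Any, float]] = {}  # key -> (value, cached_at)

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None:
            value, cached_at = entry
            # The age lets a hit be compared with the source's last write time.
            log.info("hit key=%s age=%.1fs", key, now - cached_at)
            return value
        value = self._load(key)
        self._entries[key] = (value, now)
        log.info("miss key=%s cached_at=%.3f", key, now)
        return value
```

The messages are emitted at INFO level, so something like `logging.basicConfig(level=logging.INFO)` is needed to see them. A hit whose age exceeds the time since the source last changed that key indicates a stale read.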

Another crucial technique involves the use of cache monitoring tools. These tools offer real-time visibility into the state of the cache, including metrics such as cache hit ratios, eviction rates, and memory usage. By continuously monitoring these metrics, developers can detect anomalies, but the metrics need careful interpretation. A sudden drop in the hit ratio usually points to evictions, memory pressure, or a wave of invalidations rather than to stale data, because stale entries are served as hits and can leave the hit ratio unchanged. A more telling sign is a drop in invalidation events while writes to the underlying store continue, which should prompt further investigation.

Moreover, employing cache invalidation strategies is essential for preventing stale data. Cache invalidation ensures that outdated data is removed from the cache and replaced with fresh information from the data store. There are several invalidation strategies to consider, including time-based expiration, where cached data is automatically invalidated after a specified period, and event-based invalidation, which triggers cache updates in response to specific events such as data changes in the underlying store. Implementing these strategies can significantly reduce the likelihood of stale data persisting in the cache.

In addition to these techniques, cache-specific inspection tools can greatly speed up identifying and resolving stale data issues. RedisInsight, for example, lets developers browse keys and values in Redis, check the remaining TTL of individual keys, and analyze performance metrics. Memcached exposes counters through its `stats` command and the `memcached-tool` script distributed with it, although its support for inspecting individual keys is far more limited. Inspecting a suspect key directly, including its value and remaining TTL, is often the fastest way to confirm whether it holds stale data and to identify inconsistencies in how the cache is functioning.

Furthermore, employing automated testing frameworks can help in detecting stale data issues before they impact production systems. By writing test cases that simulate various scenarios, such as data updates and cache invalidation events, developers can ensure that the caching system behaves as expected. Automated tests can also be integrated into continuous integration and deployment pipelines, providing ongoing validation of the caching system’s integrity.
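A sketch of an automated regression test for the update-then-read scenario, using Python's built-in `unittest`. `ProductService`, `get_price`, and `update_price` are hypothetical stand-ins for the code under test. Saved in a file such as `test_cache.py` (also a hypothetical name), it can be run with `python -m unittest test_cache`.

```python
import unittest


class ProductService:
    """Hypothetical service under test: cache-aside reads, invalidate on update."""

    def __init__(self) -> None:
        self.db = {}
        self.cache = {}

    def get_price(self, sku: str) -> int:
        if sku not in self.cache:
            self.cache[sku] = self.db[sku]
        return self.cache[sku]

    def update_price(self, sku: str, price: int) -> None:
        self.db[sku] = price
        self.cache.pop(sku, None)  # invalidate so the next read sees the update


class StaleDataTest(unittest.TestCase):
    def test_update_is_visible_after_cached_read(self) -> None:
        svc = ProductService()
        svc.db["sku-1"] = 10
        self.assertEqual(svc.get_price("sku-1"), 10)  # populates the cache
        svc.update_price("sku-1", 12)
        self.assertEqual(svc.get_price("sku-1"), 12)  # must not return the stale 10
```

Removing the `cache.pop` line from `update_price` makes this test fail, which is the point: it pins down the invalidation behavior so a later refactor cannot silently reintroduce stale reads.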

Lastly, collaboration and knowledge sharing among team members play a vital role in debugging caching systems. Regular code reviews, pair programming sessions, and knowledge-sharing meetings can help disseminate best practices and debugging techniques across the team. By fostering a culture of collaboration, teams can collectively address stale data issues more effectively and ensure that caching systems remain robust and reliable.

In conclusion, debugging stale data in caching systems requires a multifaceted approach that combines logging, monitoring, invalidation strategies, specialized debugging tools, automated testing, and collaborative practices. By leveraging these tools and techniques, developers can effectively identify and resolve stale data issues, ensuring that caching systems deliver accurate and up-to-date information to users.

Q&A

1. **What is stale data in caching systems?**

Stale data refers to outdated or expired information that remains in the cache, leading to inconsistencies between the cached data and the actual data source.

2. **How can stale data affect an application?**

Stale data can lead to incorrect application behavior, user frustration, and potential data integrity issues, as the application may rely on outdated information for critical operations.

3. **What are common strategies to prevent stale data in caching systems?**

   Common strategies include setting appropriate cache expiration times (TTL), implementing cache invalidation mechanisms, and using cache coherence protocols to ensure data consistency.

Conclusion

Debugging caching systems, particularly when dealing with stale data, is a complex but crucial task to ensure data consistency and system reliability. Stale data can lead to significant issues such as outdated information being served to users, which can undermine trust and system functionality. Effective debugging requires a thorough understanding of the caching mechanisms, proper invalidation strategies, and robust monitoring tools to detect and resolve stale data issues promptly. By implementing best practices and continuously refining the caching strategy, developers can mitigate the risks associated with stale data and maintain the integrity of the system.