Big Data Bungles: Processing and Analyzing Large Data Sets

Big data is a term used to describe large, complex data sets that are difficult to process and analyze using traditional methods. These data sets can come from a variety of sources, such as social media, sensors, and transaction records.

The sheer volume of big data can make it difficult to process and analyze. Traditional tools, such as a relational database running on a single server, were not designed to handle data sets of this size. Workarounds can introduce errors and inconsistencies into the data, which can have serious consequences.

In addition to the volume, big data is often complex and unstructured. This means that it does not follow a regular format, which can make it difficult to understand and extract meaning from the data.

The combination of volume and complexity makes big data a major challenge for businesses. However, there are a number of tools and techniques that can be used to process and analyze big data. These tools can help businesses to identify trends, patterns, and anomalies in the data, which can be used to improve decision-making.

Despite the challenges, big data has the potential to revolutionize the way businesses operate. By harnessing the power of big data, businesses can gain a competitive advantage and make better decisions.

Here are some of the big data bungles that can occur when processing and analyzing large data sets:

- Data accuracy: Big data sets are often prone to errors and inconsistencies. This can be due to a number of factors, such as data entry errors, sensor malfunctions, and transmission errors.

- Data completeness: Big data sets are often incomplete. This can be due to missing values, data that is not collected, or data that is deleted.

- Data accessibility: Big data sets are often difficult to access. This can be due to technical barriers, such as data silos, or legal barriers, such as privacy regulations.

- Data security: Big data sets are often vulnerable to security breaches. This can be due to a number of factors, such as weak security controls or malicious attacks.

- Data privacy: Big data sets can contain sensitive personal information. If it is not properly protected, this information can be misused to track individuals, identify patterns of behavior, and make predictions about them.

These big data bungles can have serious consequences for businesses. For example, data accuracy errors can lead to incorrect decisions being made, data completeness issues can lead to biased results, and data accessibility problems can prevent businesses from using data to make informed decisions.
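Accuracy and completeness problems are easier to manage once they are measured. The following is a minimal sketch of a per-field quality check, assuming records arrive as Python dictionaries. The sample readings, the `profile` function, and the valid temperature range are all hypothetical and chosen only for illustration.

```python
# Hypothetical sensor readings; None marks a missing value.
records = [
    {"sensor_id": "a1", "temp_c": 21.5},
    {"sensor_id": "a2", "temp_c": None},    # missing reading (completeness)
    {"sensor_id": "a3", "temp_c": 999.0},   # implausible reading (accuracy)
    {"sensor_id": None, "temp_c": 19.8},    # missing identifier
]


def profile(rows, field, valid_range=None):
    """Count missing and out-of-range values for one field."""
    missing = 0
    out_of_range = 0
    for row in rows:
        value = row.get(field)
        if value is None:
            missing += 1
        elif valid_range is not None:
            low, high = valid_range
            if not (low <= value <= high):
                out_of_range += 1
    return {"total": len(rows), "missing": missing, "out_of_range": out_of_range}


# The plausible range below is an assumption for this example only.
temp_report = profile(records, "temp_c", valid_range=(-50.0, 60.0))
id_report = profile(records, "sensor_id")
print(temp_report)
print(id_report)
```

A report like this does not fix anything by itself, but it shows how much of a data set is affected before any decision is based on it.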

Businesses can avoid these big data bungles by taking steps to improve data quality, completeness, accessibility, security, and privacy. By implementing these measures, businesses can ensure that they are getting the most value from their big data investments.

## Big Data Bungles: Processing And Analyzing Large Data Sets

Executive Summary:

Enterprises across the globe collect immense amounts of data every single day. This data has the potential to provide valuable insights that can help businesses improve their operations, make better decisions, and gain a competitive advantage. However, processing and analyzing big data is a complex and challenging task. Many organizations struggle to effectively manage and utilize their data, leading to missed opportunities and even business failures.

Introduction:

Businesses of all sizes collect vast amounts of data from a variety of sources, including customer transactions, social media, and sensors. This data can provide valuable insights into their customers, their operations, and the market as a whole, but many businesses lack the expertise and resources to manage and use it effectively.

FAQs:

- What is big data?

Big data refers to datasets that are too large or complex to be processed using traditional data processing applications. Big data is often characterized by its volume, velocity, and variety.

- Why is big data important?

Big data can provide businesses with valuable insights into their customers, their operations, and the market as a whole. This data can help businesses improve their decision-making, optimize their operations, and gain a competitive advantage.

- What are the challenges of big data?

The challenges of big data include:

* **Volume:** Big data sets are often very large, making them difficult to store and process.

* **Velocity:** Big data is often generated in real time, making it difficult to keep up with the pace of data growth.

* **Variety:** Big data sets often contain a variety of data types, including structured, unstructured, and semi-structured data. This variety makes it difficult to integrate and analyze data from different sources.

Top 5 Subtopics:

Data Integration

Data integration is the process of combining data from multiple sources into a single, unified view. This process can be challenging, as data from different sources often has different formats and structures.

- Data quality: Data quality is essential for effective data integration. Poor-quality data can lead to inaccurate results and wasted time.

- Data governance: Data governance is the process of managing and controlling data assets. This includes establishing policies and procedures for data collection, storage, and use.

- Metadata management: Metadata management is the process of managing data about data. This includes information about the data’s structure, format, and quality.
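To make the idea of a single, unified view concrete, here is a minimal sketch that merges two sources with different field names and value formats. The two sources, their schemas, and the function names are hypothetical. The `sources` list attached to each record is a very small example of metadata: it records where each unified record came from.

```python
# Two hypothetical sources describing customers with different schemas.
crm_rows = [
    {"CustomerID": "17", "FullName": "Ada Smith", "Email": "ADA@EXAMPLE.COM"},
    {"CustomerID": "42", "FullName": "Bo Chen", "Email": "bo@example.com"},
]
billing_rows = [
    {"cust_id": 17, "total_spend": "120.50"},
    {"cust_id": 99, "total_spend": "15.00"},
]


def normalize_crm(row):
    """Map a CRM row onto the shared schema."""
    return {
        "customer_id": int(row["CustomerID"]),
        "name": row["FullName"].strip(),
        "email": row["Email"].strip().lower(),
    }


def normalize_billing(row):
    """Map a billing row onto the shared schema."""
    return {
        "customer_id": int(row["cust_id"]),
        "total_spend": float(row["total_spend"]),
    }


def integrate(crm, billing):
    """Combine both sources into one record per customer_id."""
    tagged = [("crm", normalize_crm(r)) for r in crm]
    tagged += [("billing", normalize_billing(r)) for r in billing]
    unified = {}
    for source, row in tagged:
        entry = unified.setdefault(row["customer_id"], {"sources": []})
        entry.update(row)
        entry["sources"].append(source)  # metadata: where the record came from
    return unified


customers = integrate(crm_rows, billing_rows)
for customer_id in sorted(customers):
    print(customer_id, customers[customer_id])
```

Customers that appear in only one source still get a record, and the missing fields make the gap visible instead of hiding it.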

Data Storage

Data storage is the process of storing data in a way that allows it to be easily accessed and retrieved. Big data sets often require specialized storage solutions, such as the Hadoop Distributed File System (HDFS) or NoSQL databases.

- Storage capacity: Storage capacity is the amount of data that can be stored on a given storage device.

- Storage performance: Storage performance is the speed at which data can be accessed and retrieved from a given storage device.

- Storage cost: Storage cost is the cost of storing data on a given storage device.
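Capacity, performance, and cost are linked, and a back-of-the-envelope calculation shows how. The sketch below is only an illustration: the `estimate_storage` function and every number passed to it (raw data size, replication factor, price per terabyte, and read throughput) are assumptions, not figures from any vendor.

```python
def estimate_storage(raw_tb, replication_factor, cost_per_tb_month, throughput_mb_s):
    """Rough capacity, cost, and scan-time estimate for a replicated data store."""
    # Capacity: every copy of the data takes up space.
    stored_tb = raw_tb * replication_factor
    # Cost: pay for the stored volume, not just the raw volume.
    monthly_cost = stored_tb * cost_per_tb_month
    # Performance: hours for one sequential pass over the raw data,
    # using decimal units (1 TB = 1,000,000 MB).
    full_scan_hours = raw_tb * 1_000_000 / throughput_mb_s / 3600
    return {
        "stored_tb": stored_tb,
        "monthly_cost": monthly_cost,
        "full_scan_hours": full_scan_hours,
    }


# All inputs are hypothetical.
estimate = estimate_storage(
    raw_tb=50,
    replication_factor=3,
    cost_per_tb_month=20.0,
    throughput_mb_s=500.0,
)
print(estimate)
```

Even a rough estimate like this makes the trade-off visible: keeping more copies improves resilience but multiplies both the capacity required and the monthly cost.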

Data Processing

Data processing is the process of converting raw data into a format that can be analyzed. Big data processing often requires specialized software and hardware.

- Data cleansing: Data cleansing is the process of removing errors and inconsistencies from data.

- Data transformation: Data transformation is the process of converting data from one format to another.

- Data reduction: Data reduction is the process of reducing the size of a data set without losing any important information.
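The three steps above can be sketched as a small pipeline. This is a minimal illustration, assuming sales events arrive as dictionaries of strings. The sample events and the function names are hypothetical, and aggregation is used here as one simple form of data reduction among several.

```python
from collections import defaultdict

# Hypothetical raw events with inconsistent formatting.
raw_events = [
    {"store": " north ", "amount": "10.00"},
    {"store": "North", "amount": "5.50"},
    {"store": "south", "amount": "n/a"},   # amount is not a number
    {"store": "South", "amount": "7.25"},
    {"store": "", "amount": "3.00"},       # store is missing
]


def cleanse(rows):
    """Drop rows with a missing store or an amount that is not a number."""
    for row in rows:
        store = row["store"].strip()
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        if store:
            yield {"store": store, "amount": amount}


def transform(rows):
    """Convert to one canonical format: lowercase store name, integer cents."""
    for row in rows:
        yield {"store": row["store"].lower(), "cents": round(row["amount"] * 100)}


def reduce_by_store(rows):
    """Shrink the data set to one total per store."""
    totals = defaultdict(int)
    for row in rows:
        totals[row["store"]] += row["cents"]
    return dict(totals)


totals = reduce_by_store(transform(cleanse(raw_events)))
print(totals)
```

Because each step is a generator, rows flow through one at a time, so the same structure works when the input is too large to hold in memory. Whether per-store totals keep all the important information depends on the questions the analysis needs to answer.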

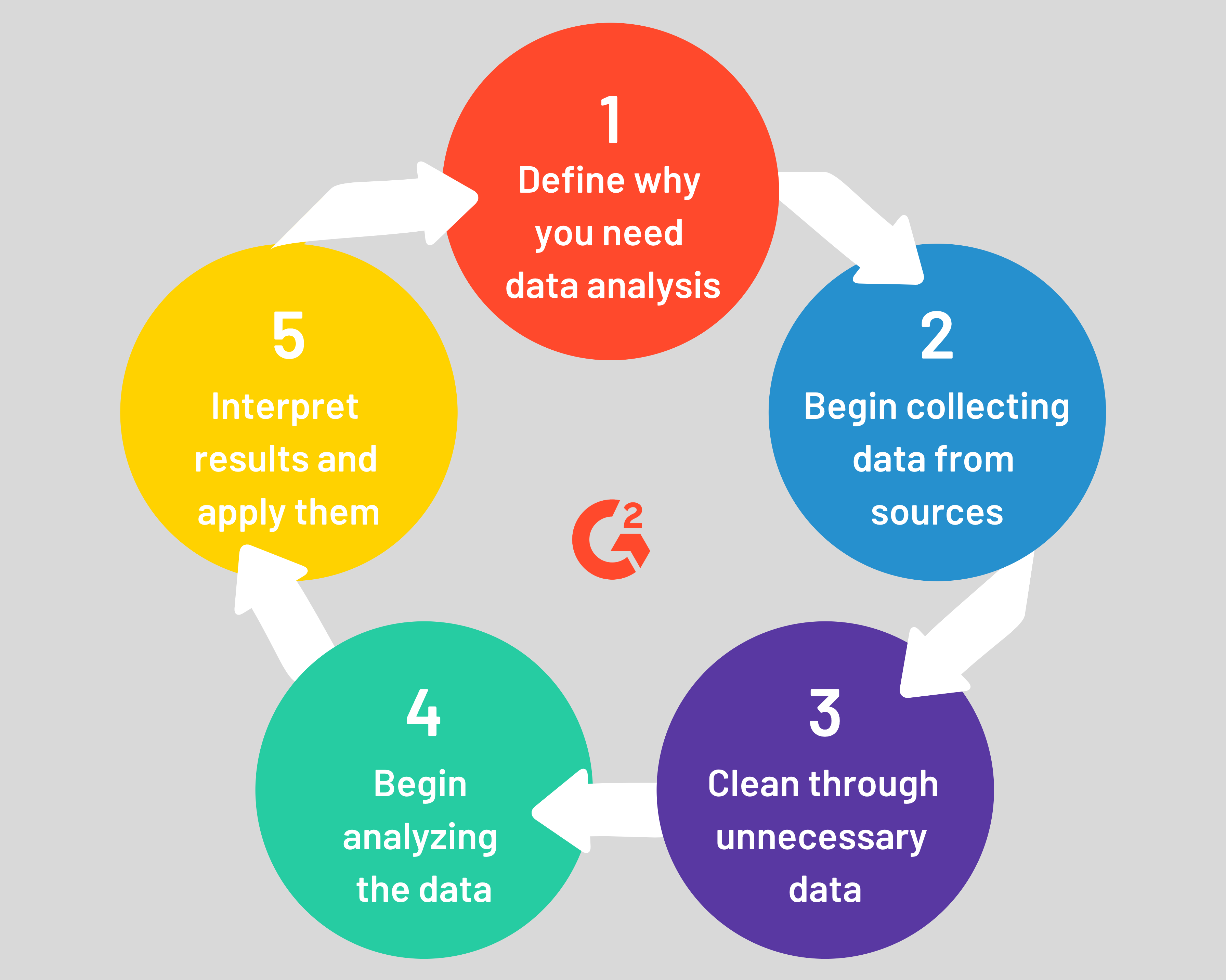

Data Analysis

Data analysis is the process of extracting insights from data. Big data analysis often requires specialized statistical and machine learning techniques.

- Exploratory data analysis: Exploratory data analysis is the process of exploring data to identify patterns and trends.

- Predictive analytics: Predictive analytics is the process of using data to predict future events.

- Prescriptive analytics: Prescriptive analytics is the process of using data to recommend actions.
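The difference between the three kinds of analysis is easiest to see on a tiny example. The sketch below assumes a short series of weekly sales figures and a simple straight-line trend. The data, the stock level, and the reorder rule are hypothetical, and a least-squares line stands in for whatever statistical or machine learning model a real project would use.

```python
from statistics import mean

# Hypothetical weekly unit sales, oldest first.
weekly_sales = [100, 110, 120, 130, 140]


def fit_line(ys):
    """Least-squares fit of y = intercept + slope * x for x = 0, 1, 2, ..."""
    xs = range(len(ys))
    x_bar, y_bar = mean(xs), mean(ys)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    slope = numerator / denominator
    return slope, y_bar - slope * x_bar


# Exploratory: summarize what the data looks like.
summary = {
    "min": min(weekly_sales),
    "max": max(weekly_sales),
    "mean": mean(weekly_sales),
}

# Predictive: extend the fitted trend one week ahead.
slope, intercept = fit_line(weekly_sales)
forecast = intercept + slope * len(weekly_sales)

# Prescriptive: turn the prediction into a recommended action.
stock_on_hand = 135  # hypothetical inventory level
action = "reorder" if forecast > stock_on_hand else "hold"

print(summary, forecast, action)
```

The exploratory step describes the past, the predictive step estimates next week, and the prescriptive step turns that estimate into a decision.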

Data Visualization

Data visualization is the process of representing data in a graphical format. This can help make data easier to understand and communicate.

- Data visualization tools: There are a variety of data visualization tools available, including Tableau, Power BI, and Google Data Studio (now called Looker Studio).

- Data visualization techniques: There are a variety of data visualization techniques, including charts, graphs, and maps.

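- Data visualization best practices: There are a number of best practices for creating effective data visualizations, such as choosing a chart type that suits the data, labeling axes and units clearly, and avoiding unnecessary clutter.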

Conclusion:

Processing and analyzing big data is a complex and challenging task, but it is essential for businesses that want to succeed in today’s digital age. Businesses that lack in-house expertise may want to hand this work to a professional big data firm. By following the practices outlined in this article, businesses can overcome the challenges of big data and unlock its full potential.
