Batch Processing Breakdowns: Managing Large-scale Data Operations

Executive Summary

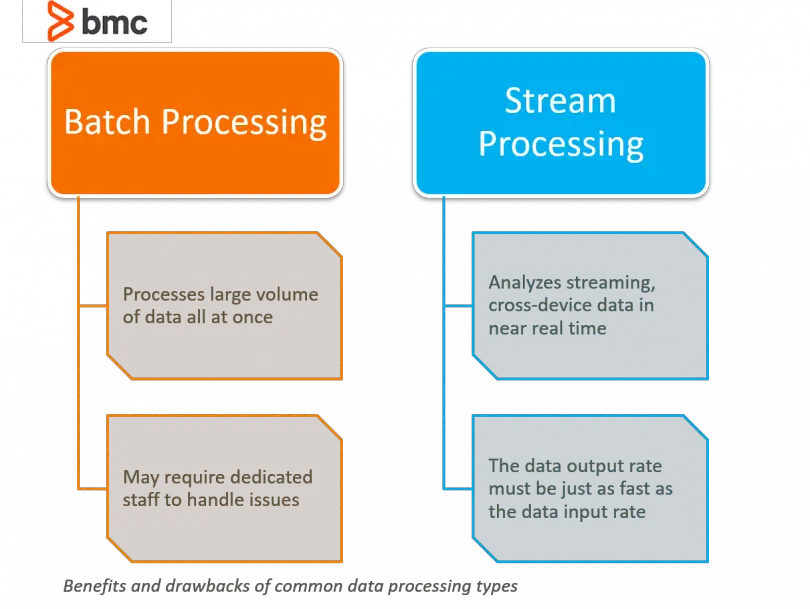

Batch processing is a core data-management technique for handling large volumes of data efficiently. Running batch jobs reliably at scale, however, raises recurring problems with data quality, performance, error recovery, scheduling, and security. This article examines these common issues and outlines strategies for addressing them, with the aims of improving performance and preserving data integrity.

Introduction

As data volumes grow, batch processing has become indispensable for managing large amounts of information. From financial transactions to customer analytics, it lets organizations analyze, transform, and aggregate data in a controlled, cost-effective way. Its intricacies, however, often lead to errors and inefficiencies. This article explores the main challenges of batch processing and offers practical solutions for each.

FAQs

What is batch processing?

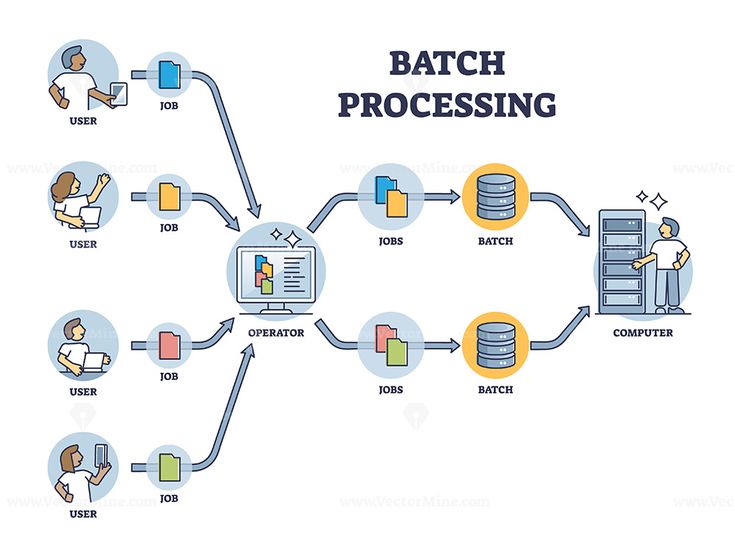

Batch processing is a method of data processing in which a group of records or tasks (a batch) is collected and processed together, usually without user interaction and often on a schedule. Because fixed costs such as job startup, connection setup, and I/O are paid once per batch rather than once per item, the approach reduces system overhead and improves resource utilization.

What types of batch processing applications exist?

Batch processing finds application in various domains, including:

- Data analytics and reporting

- Data warehousing and consolidation

- Data migration and integration

- Financial and transactional processing

- System maintenance and updates

What are the benefits of batch processing?

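Batch processing offers several advantages, including:

- Reduced costs: Processing records in bulk spreads fixed per-operation costs across many items, which is usually cheaper than handling each record individually.

- Higher throughput: Batch processing keeps system resources busy and maximizes throughput. The trade-off is latency: results are available only after a batch completes, so batch processing suits workloads that can tolerate delay.

- Increased reliability: Running tasks in a controlled batch environment makes it easier to validate inputs, checkpoint progress, and retry or roll back failed work, which improves fault tolerance and helps protect data integrity.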
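Grouping items and processing each group as a unit can be sketched in a few lines of Python. This is an illustration, not a reference implementation: `batched` and `process_batch` are hypothetical names, and the per-batch work is a placeholder sum. (Python 3.12 and later also ship `itertools.batched`, which yields tuples rather than lists.)

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items from `items`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    # islice takes up to `size` items; an empty list means the input is exhausted.
    while batch := list(islice(iterator, size)):
        yield batch


def process_batch(batch: List[int]) -> int:
    """Hypothetical per-batch job; here it only sums the records.

    In a real job, fixed costs such as opening a connection or starting a
    transaction would be paid once here, per batch, not once per record.
    """
    return sum(batch)


if __name__ == "__main__":
    for batch in batched(range(1, 11), size=4):
        print(len(batch), process_batch(batch))
```

With ten records and a batch size of four, the job runs three times, on batches of 4, 4, and 2 records.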

Overcoming Batch Processing Challenges

Data Quality and Validation

Data quality plays a pivotal role in the success of batch processing operations. Ensuring accurate and consistent data is paramount for reliable and actionable results. Key considerations include:

- Data validation: Implementing robust data validation mechanisms to identify and correct errors.

- Data standardization: Establishing consistent data formats and standards to ensure interoperability.

- Data cleansing: Removing duplicate, outdated, or irrelevant data to improve data integrity.

- Data completeness: Verifying that all required fields and records are present.
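A minimal sketch of how these four checks might be combined in one pass over a batch, assuming records arrive as dictionaries of strings. The schema (`customer_id`, `email`, `signup_date`), the deliberately simplistic e-mail check, and the helper names are all hypothetical. Invalid rows are set aside with a reason instead of failing the whole batch.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Tuple

Record = Dict[str, str]

# Hypothetical schema: every record must carry these fields.
REQUIRED_FIELDS = ("customer_id", "email", "signup_date")


@dataclass
class ValidationReport:
    valid: List[Record] = field(default_factory=list)
    rejected: List[Tuple[Record, str]] = field(default_factory=list)  # (raw record, reason)


def standardize(record: Record) -> Record:
    """Standardization: trim whitespace and lower-case e-mail addresses."""
    cleaned = {key: value.strip() for key, value in record.items()}
    if "email" in cleaned:
        cleaned["email"] = cleaned["email"].lower()
    return cleaned


def validate_batch(records: List[Record]) -> ValidationReport:
    report = ValidationReport()
    seen_ids = set()
    for raw in records:
        record = standardize(raw)
        # Completeness: required fields must be present and non-empty.
        missing = [name for name in REQUIRED_FIELDS if not record.get(name)]
        if missing:
            report.rejected.append((raw, "missing fields: " + ", ".join(missing)))
            continue
        # Validation: a deliberately simple e-mail check plus an ISO-8601 date check.
        if "@" not in record["email"]:
            report.rejected.append((raw, "invalid email"))
            continue
        try:
            date.fromisoformat(record["signup_date"])
        except ValueError:
            report.rejected.append((raw, "invalid signup_date"))
            continue
        # Cleansing: keep only the first record seen for each customer_id.
        if record["customer_id"] in seen_ids:
            report.rejected.append((raw, "duplicate customer_id"))
            continue
        seen_ids.add(record["customer_id"])
        report.valid.append(record)
    return report


if __name__ == "__main__":
    rows = [
        {"customer_id": "1", "email": " Ana@Example.com ", "signup_date": "2024-01-05"},
        {"customer_id": "2", "email": "bob-at-example", "signup_date": "2024-02-10"},
        {"customer_id": "1", "email": "ana@example.com", "signup_date": "2024-01-05"},
        {"customer_id": "3", "email": "", "signup_date": "2024-03-01"},
    ]
    report = validate_batch(rows)
    print(len(report.valid), [reason for _, reason in report.rejected])
```

Keeping rejected rows together with a reason, rather than silently dropping them, makes it possible to report on data quality and to reprocess corrected records later.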

Performance Optimization

The efficiency of batch jobs directly affects how quickly downstream pipelines receive their data and how much load the overall system carries. Optimizing performance involves:

- Resource management: Allocating resources efficiently to avoid bottlenecks and maximize throughput.

- Concurrency optimization: Tuning the number of tasks executed concurrently; too few leaves resources idle, while too many causes contention for CPU, memory, or I/O.

- Data partitioning: Dividing large datasets into smaller, manageable chunks that can be processed in parallel and rerun independently.

- Indexing strategies: Employing indexing techniques to accelerate data retrieval and reduce processing time.
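Partitioning and bounded concurrency can be combined as in the following sketch, which splits a dataset into near-equal slices and processes them on a pool with a fixed number of workers. `partition`, `process_partition`, and `run_job` are hypothetical names, and the per-partition work is a stand-in. The sketch uses `ThreadPoolExecutor`, which suits I/O-bound partitions such as database queries or file writes; for CPU-bound pure-Python work, `ProcessPoolExecutor` is the usual substitute, because threads in standard CPython builds do not execute Python bytecode in parallel.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def partition(data: Sequence[int], num_partitions: int) -> List[Sequence[int]]:
    """Split `data` into `num_partitions` contiguous slices of near-equal size."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    size, remainder = divmod(len(data), num_partitions)
    parts, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        parts.append(data[start:end])
        start = end
    return parts


def process_partition(part: Sequence[int]) -> int:
    """Hypothetical stand-in for per-partition work (here, a sum of squares)."""
    return sum(x * x for x in part)


def run_job(data: Sequence[int], num_partitions: int, max_workers: int) -> int:
    # max_workers caps concurrency independently of the partition count, so
    # splitting into many small partitions cannot oversubscribe the machine.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return sum(pool.map(process_partition, partition(data, num_partitions)))


if __name__ == "__main__":
    print(run_job(list(range(1_000)), num_partitions=8, max_workers=4))
```

Choosing more partitions than workers keeps each unit of work small and cheap to rerun, while `max_workers` bounds how many run at once.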

Error Handling and Recovery

Errors are inevitable in batch processing. Robust error handling limits their impact on data integrity and system stability. Key strategies include:

- Exception management: Defining clear exception handling processes to identify and resolve errors efficiently.

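- Retry mechanisms: Automatically retrying failed tasks, typically with exponential backoff and only for transient failures such as timeouts. Retried tasks should be idempotent so that a retry does not duplicate work.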

- Data rollback: Enabling rollback, for example by running each batch inside a database transaction, so that changes can be reverted when errors occur, preserving data integrity.

- Job monitoring: Establishing real-time job monitoring systems to detect and respond to errors promptly.
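The following sketch combines two of these strategies: retrying transient failures with exponential backoff and jitter, and loading each batch inside a transaction so that a failure rolls back every row written so far. It assumes a SQLite database and a hypothetical `payments` table; `TransientError`, `with_retries`, and `load_batch` are illustrative names, not part of any library.

```python
import random
import sqlite3
import time
from typing import Callable, Iterable, Tuple, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, lock contention)."""


def with_retries(task: Callable[[], T], attempts: int = 3, base_delay: float = 0.5) -> T:
    """Run `task`, retrying TransientError with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except TransientError:
            if attempt == attempts:
                raise  # retries exhausted: let monitoring and the scheduler see it
            delay = base_delay * 2 ** (attempt - 1)  # doubles each attempt: 0.5 s, 1 s, 2 s, ...
            time.sleep(delay + random.uniform(0, delay))  # jitter spreads out retries
    raise RuntimeError("unreachable")  # the loop always returns or re-raises


def load_batch(conn: sqlite3.Connection, rows: Iterable[Tuple[int, float]]) -> None:
    """Insert a whole batch atomically: either every row commits or none do."""
    # A sqlite3 connection used as a context manager commits when the block
    # succeeds and rolls back the open transaction if it raises.
    with conn:
        for account_id, amount in rows:
            if amount < 0:
                raise ValueError(f"negative amount for account {account_id}")
            conn.execute(
                "INSERT INTO payments (account_id, amount) VALUES (?, ?)",
                (account_id, amount),
            )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payments (account_id INTEGER, amount REAL)")
    try:
        with_retries(lambda: load_batch(conn, [(1, 10.0), (2, -5.0)]))
    except ValueError as exc:
        # ValueError is not transient, so it is not retried; the batch is rolled back.
        print("batch failed:", exc)
    print(conn.execute("SELECT COUNT(*) FROM payments").fetchone()[0])
```

Because a failed `load_batch` call leaves no partial rows behind, retrying it after a failure does not create duplicates.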

Scheduling and Dependency Management

Batch processing often involves complex dependencies and scheduling requirements. Effective management is crucial for ensuring timely and reliable execution. Important considerations include:

- Job scheduling: Implementing automated job scheduling systems to ensure timely and orderly execution of batch tasks.

- Dependency mapping: Clearly defining and managing task dependencies so that each job starts only after the jobs it depends on have completed, avoiding scheduling conflicts.

- Load balancing: Distributing batch tasks across multiple servers or nodes to optimize resource utilization and minimize processing time.

- Concurrency control: Managing task concurrency to prevent overloading and ensure efficient execution.
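Dependency mapping can be expressed as a directed acyclic graph and ordered with a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to group a hypothetical nightly pipeline into waves of jobs whose dependencies are all satisfied; jobs within a wave could run concurrently. The job names are invented for illustration.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical nightly pipeline: each job maps to the jobs it depends on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
    "daily_report": {"join_orders_customers"},
}


def execution_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group jobs into waves; jobs in the same wave have no pending dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the dependency graph contains a cycle
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make output deterministic
        waves.append(ready)
        # A real scheduler would dispatch `ready` to workers and call done()
        # for each job as it finishes; here the whole wave completes at once.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(execution_waves(DEPENDENCIES), start=1):
        print(number, wave)
    try:
        execution_waves({"a": {"b"}, "b": {"a"}})
    except CycleError as exc:
        print("cycle detected:", exc.args[1])
```

For the sample graph, the two extract jobs form the first wave; the cleaning, join, and report jobs then each run in their own wave, because each depends on the one before it.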

Security and Compliance

Data security and compliance are paramount in batch processing operations. Robust measures ensure the protection of sensitive data and adherence to regulatory requirements. Key considerations include:

- Access control: Implementing access controls to prevent unauthorized access to data and system resources.

- Data encryption: Encrypting sensitive data both at rest and in transit to safeguard against data breaches.

- Audit logging: Maintaining detailed audit logs to track user activities and data access patterns.

- Compliance monitoring: Continuously monitoring systems to ensure adherence to regulatory requirements and industry standards.
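Access control and audit logging are often implemented together, so that every authorization decision leaves a trace. The sketch below assumes a hypothetical role-to-permission table and writes one JSON audit record per check through Python's standard `logging` module. A production system would typically load policies from an identity provider and send audit logs to tamper-resistant storage; encryption is not shown here.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Dict, Set

# Hypothetical role-to-permission mapping; a real deployment would load
# policies from an identity provider or policy store.
PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read:reports"},
    "etl_service": {"read:raw", "write:warehouse"},
}

audit_log = logging.getLogger("batch.audit")


def authorize(user: str, role: str, permission: str, job: str) -> bool:
    """Check a permission and write one audit record, whether granted or denied."""
    allowed = permission in PERMISSIONS.get(role, set())
    audit_log.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "job": job,
        "allowed": allowed,
    }))
    return allowed


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(name)s %(message)s")
    if authorize("etl-bot", "etl_service", "write:warehouse", "nightly_load"):
        print("nightly_load may write to the warehouse")
    if not authorize("ana", "analyst", "write:warehouse", "nightly_load"):
        print("ana is denied write access")
```

Logging denied requests as well as granted ones is what makes the audit trail useful for spotting misuse.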

Conclusion

Batch processing plays a critical role in large-scale data operations, but its complexities can be challenging. By understanding the common issues and applying the strategies outlined in this article for data quality, performance, error handling, scheduling, and security, organizations can improve batch performance, protect data integrity, and get more value from their data, supporting informed decision-making and operational excellence.

Keyword Tags

- Batch processing

- Data management

- Data quality

- Performance optimization

- Error handling